To TELL

- thepadol2

- Apr 23, 2024

- 23 min read

To TELL is a tale about courtship in the era of mainframe computing, but TELL itself is also a feature that bears testament to a part and time of computing history that was known to a few fortunate people and that today is by and large almost forgotten.

Ask anyone who is not part of the IT world what a computer is, and the answer could be anything that is more about computing than about computers themselves. Android as in an Android phone rather than an Apple iPhone? Cloud, as that's where all the computers are? The internet, since computers no longer exist because they have become the internet? A PC, without even knowing what it really is? IBM used to make computers and no longer does, having lost to Microsoft? Each holds some part of the truth, so maybe the answers aren't entirely wrong. A pity though, because how we got here wasn't trivial and neither is where we are still going. The path of discovery, perseverance, choices, and failures has always been part of any evolution, technological or not. It's like saying if we need to do something, a thousand things need to happen first. Which path is taken is a consequence of many choices made earlier at different forks, knowingly or not.

Anyone of my generation who had anything to do with computer science will probably know some of the history, and would also appreciate the technical subtleties. For others, which is just about everyone else, this would simply be seen as a part of recorded history, and likely with disinterest because of its technical nature. But as we look at today, we find computing technology literally embedded and pervasive in whatever we do. At times there is no choice - a simple analog phone to just call and talk to someone is almost a museum piece. We have even stopped saying making a phone call, as just saying call is enough to indicate a communication, in whatever form and means. Grandparents have adapted to mobile technology innovation not just to be in contact with the grandchildren, but literally to participate in their always on and always available connectivity. So recounting To TELL itself might not be extraordinary in today's context, but when placed in the context of its time the innovations that made it possible are extraordinary.

This context itself is an interesting story to relate when the right dots are connected, and to do so in a way that lets anyone easily appreciate the course of this history.

Well, a complete history of computing is certainly available on the internet and besides being redundant to repeat, it is tedious and makes for boring reading unless you are really curious or a computer buff. Neither would a condensed version be any better. More entertaining is a quick dance tiptoeing on some of the time and aspects that could be appreciated for this tale in a so called layman's terms, or simply in relatable English.

An Opening

While Stanley Kubrick's 2001: A Space Odyssey in 1968 might be easily remembered for HAL, the onboard computer that was almost life-like and that could interact verbally, the idea of a video call as shown by Dr. Heywood Floyd's call to his daughter was certainly just as exciting as a view of the future. Today an app like WhatsApp is ubiquitous in providing seamless voice to voice communication, and even more so in that the call could just as easily be a video call without any major back bending to make it work. The text chat capability itself is taken for granted as being the bare minimum for such an app. Some people might still recall that chat was a well used and important communication mode on the PC prior to WhatsApp, and that it was available through different offerings and apps.

But a chat app goes further back in history and it is nothing more than a block of messages.

Indeed when I look back in time, out of curiosity of why or how certain things developed, I realize that what we see and experience is really just in the moment and the things we do and have are just whatever is within sight and reach. Most of the time none of it feels extraordinary especially when it is part of our daily lives and our work. Much like seeing the leaves around you and never actually seeing what the forest is really made of. It's just to say that my own experience with TELL, at the time, was simply to use it for the benefits it gave me, and I had no idea how it came to be, and even if I had the interest there was no "documented history" because at the time it was the current history.

Unintentionally I was looking no further than my own nose. The extent of my world in computing was what I had at my fingertips, and by and large, although I felt fortunate to be exploring a new technology, I really had no appreciation of how unique it was and that it was pretty exclusive. Granted that at the pace computing was evolving things changed rapidly, but what I was doing, and where, still remained the privilege of few.

Say punch cards and most could probably picture what they looked like. If you're old enough maybe you even worked with them. If you're young they are just something from the history of computing, ages or eons ago would be the perceived time frame. What people can envision though is simply a card with holes and that the holes represent something, but they might only get half the story. Yes, it's easy to think of punch cards as a way to input data, as best exemplified by the US Census Bureau's need - the collection of vast amounts of data that needed to be entered so that statistics could be generated. The aspect generally overlooked is that a stack of punch cards, basically thick paper, was infinitely cheaper than any other technology to store information. So in essence a stack of punch cards is the equivalent of today's memory or USB flash drive, although with just a tiny fraction of the space. As a storage medium it was also perfect for programmers to store their programs and be able to re-enter them into the mainframe without having to retype it all over again. The difference with the census data is that the punch cards for a program had to keep their sequence, and so for every programmer at the time their worst nightmare would at one point happen, when for some unfortunate reason the stack of newly punched cards for their latest program would be spilled onto the floor. Re-ordering the cards was an impossible undertaking. Likewise just a single card out of sequence would invalidate the entire program.

Punch cards just didn't cut it to support an exponential growth in what we wanted and could do at the time. Much like telegraphy, which works but is limiting. The advent of the teletype made a significant impact in terms of efficiency and capability. Not even considering telephony, which is a quantum leap. So a mainframe's full potential was exploited when many people could use it simultaneously, essentially sharing the processing power. How a mainframe computer is different from a personal computer is a commonly posed question, and answers illustrating the differences abound, but at the end of the day they are basically the same conceptually. I would simply describe a mainframe as many thousand PCs put together, or say that PCs are slices of a mainframe. In the mid 60s, the early mainframes brought to the market by International Business Machines (IBM), the System/360, were capable of running multiple tasks, and highly suited to specific uses. Interestingly, while the hardware architecture and technology were proprietary, IBM made clear through specifications and documentation how the hardware elements could be accessed and used, so that appropriate software could be designed by others to exploit the uses.

A New Chapter

1982, a new chapter in my life. The first real job. A new city with no friends, but family at least. The future beckons. Fearless? No, but it's a defining moment on how one starts anew in life's journey.

I had accepted a job with IBM, who would have guessed, but not in sales or marketing, and in Bangkok of all places. Bangkok as a branch office had no product or technology development, so a job as a systems analyst/programmer sounded close enough to being technical and as far as you could get from selling. Indeed, only IBM, as the world leading corporation in Information Technology, had the means to render any branch office in any country literally on par with any other location - with a global standardization of values, practices, training, and the tools of the trade, computing included. It may have been a small branch office of 100 people, but there was a mainframe because it was the only way standard IBM wide in-house applications could be made available. The same mainframe obviously would have been overkill for just the internal uses, so "time" was sold to clients wanting to process various data.

You might say "systems analyst?" and it would really be funny because you couldn't be faulted for thinking that it is an antiquated job role and hardly relevant in current times. I mean if I said programmer or even business analyst you would probably say cool and think yeah, that's still part of the current vocabulary in the IT industry. My job wasn't just systems analyst straight up but also required programming, as in the PL/1 language in use by IBM for its business applications. In reality systems analyst still ranks among the top jobs in the IT industry, it just isn't a common role across all companies.

Truth of the matter was that while I made extensive use of computing at Rensselaer Polytechnic Institute (RPI), my alma mater, as part of my computer science studies, it was more along the lines of using a mainframe to understand programming languages, and to perform computational calculations for results. My role as a systems analyst at IBM was to deliver the applications the branch office needed to operate its business effectively, and this was by analyzing the business needs, then designing and implementing information systems to meet those needs. Internally for IBM this meant choosing company wide tools and customizing them for the specific location.

I was hired off cycle, kind of like a walk-in candidate, so they hadn't planned an office space for me and for a while I had to make do with a desk in the hallway. It just happened to be next to the data center, and it couldn't have been any closer to the famous saying of the glass house, as the IBM mainframe was sitting behind full height glass in an area right next to my temp desk. I couldn't have been faulted for thinking that mainframes were ubiquitous in companies since after all, my six years at RPI were with a constant and easy access to computing, or better remembered as submitting programs in Fortran and other languages to then wait for results on computer printouts. The IBM Personal Computer had just come on the market and Microsoft Windows was still years away. So, as a systems analyst, using the mainframe was a fundamental part of my job and it felt just like a daily task rather than being something mysterious, exclusive, specialized, and literally beyond reach to most. Being a systems analyst with the mainframe as a tool of the trade was certainly well within my comfort zone at the time.

The Pieces of History a Few Years Prior

Today the mainframe to most remains mysterious and out of sight. Many even think it's extinct or at least a dying breed. Quite the opposite of the truth, but the future of the mainframe is not part of this tale. Ironically it started in basements due to its size and today it is still underground, as an exclusive community that is mostly out of sight.

To describe TELL, a piece of mainframe history must be recounted. During my six years at RPI, some fifty years ago, I found a well established computing facility, and a very unique computing center in that it was housed in a former chapel that was styled as a cathedral. The Voorhees Computing Center was a combination of old and new. The power and capabilities didn't seem particularly exceptional at the time, as most major US colleges and universities had mainframe computers as a computational platform and to support their administration, primarily in accounting, payroll systems, and enrollment. The rest of the world in the late 70s, instead, was still largely unexposed to any real digital benefits, the only exception being the calculator, which was embraced by many across industries and needs. Certainly major companies were using mainframes, but these were only accessible to a limited number of people, and just reams of printout reached the hands of staff. Smaller companies still didn't have the benefits of smaller so called minicomputers. What I had at RPI was there every day and it enabled me to do many things as well as ride the wave of the "new thing". Seen from the outside this would have been a different planet.

The thing though, and I had no real appreciation of it at the time, was that the computing facilities and the mainframe services at RPI were both special and unique in many ways. This is where the tale starts as a backdrop to TELL. While as a freshman I submitted a deck of punch cards to run a program written in FORTRAN, I quickly progressed to using a cathode ray tube (CRT) with a keyboard, the famous green screen. How I interacted with the mainframe with this screen came to define what I presumed to be a normal way of doing things. The year was 1978, a lifetime ago.

Introducing Time-sharing

The pace of technological innovation has always moved quickly, forever accelerating. Without the implementation of time-sharing on the mainframe, TELL would not have been possible and without TELL this courtship tale might not have happened. But time-sharing itself wasn't a given, whether because of technical challenges or internal politics. The history of computing is typically told from a hardware perspective as in gears, vacuum tubes, transistors, semiconductors, memory, storage, in a timeline of evolution and inventions. It's only half the story because the other piece, software, is equally important although challenging as a subject to describe in layman's terms. TELL is software. Time-sharing is software and also requires features in hardware.

So without time-sharing TELL would never have happened. What then is the relevance of time-sharing?

The early years of computing and its development were not a simple and linear process. They are littered with competing ideas, some revolutionary, some successes, many failures, and differences in view of what the future should look like.

The birth of modern computing starts with mathematics as this was the discipline that has dealt with the concepts of computational efficiencies. And the question is where were the mathematicians, and not just who were they?

The 1930's

The birth of modern computing in terms of the hardware implementation has its roots in the 30s of the 20th century with Alan Turing and his Turing machine model. From this there were several developments based on the concepts provided. What is somewhat forgotten is that research was not in a vacuum but thrived in specific communities that were part of universities, as an extension of existing mathematics departments, and places with a history of excellence, prestige, and innovation.

The years leading to the 1930s was a period of intense fervor with many intellectual giants among physicists and mathematicians, Albert Einstein, Robert Oppenheimer, Alan Turing, just to name a few instantly recognizable names. You could say that their main work was theoretical and theoretical research was in the academic domain with leading universities vying to have these geniuses at their place. These geniuses were both competitive in their attempts to publish discoveries first while still willing to pursue collaborations albeit with constraints. While the likes of Einstein are easily perceived by all for their contributions, the same was true for advancement leading to computing architecture but hardly anyone remembers the names of the figures involved. Instead, computing technology is much more associated with innovations from companies for commercial use, the best example of the era was IBM and the US Census Bureau, and even Amdahl for a cheaper but compatible mainframe alternative.

The history of computing is perceived to be complex by the general public, and it becomes even more complex when one understands a peculiarity in that there are actually two parallel historical paths, separate in some ways but at the same time forever intertwined.

Everyone knows the terms hardware and software, and today everyone knows that a computer is such when both exist together. Few understand that computer technology development, which characterizes the hardware, and computing science advancement on the software side were each driven by different needs and interests. Quite a different mindset. Indeed the early development of the mainframe was not simple, it wasn't linear, and it was full of contention and dissidence - just because the mainframe as hardware had certain objectives for commercial use by companies, but at the same time the academic community that was focused on computer science advancement had a different perspective. Most people think that early computing development and advancement was monolithic, as we see with Apple and Tesla in today's era. But no, IBM was driven by its customers, whose immediate objective was better automation and ways to replace human tasks that were structured or rigid. From this commercial perspective, IBM toiled away at making machines that were faster and at ways to handle input easily and quickly. At the same time, computer scientists who explored the new boundaries were really defining new ways that computing could do things differently, and so while they had the same machines that IBM provided to other customers, they were defining and trying new architectures to improve and extend what was available, if not to revolutionize it completely.

Which way was innovation going to go?

The 1940s

At universities and associated centers of research, for mathematicians anything would have remained exquisitely theoretical even if they could be recognized as patents. Furthermore, whatever research they worked on had to be funded, and funding was never easy for activities that might not have a return. Then during WWII, many projects, some visible, many hidden or even secretive, needed the help of mathematicians, and therefore a new source of funding became available. While we subsequently learned about the involvement of some of the finest minds to advance cryptography while deciphering enemy codes, there were many other projects that funded various groups in universities, but never saw the results before the end of WWII. WWII caused an incredible war effort industrialization and also several areas of critical innovation requiring all types of research, and a key to these was the need for unheard of computational power to crunch numbers as quickly as possible. While the actual machines only became a reality after the war, the key scientists and mathematicians were by now heavily invested in this new direction. Some joined IBM and others became leaders in different university research centers in computer science.

The 1950s

The most famous result of all this was the Electronic Numerical Integrator and Computer (ENIAC), which most would recognize as a name and which would become the poster child as the first computer of modern times. Maybe not too far from the truth. What escapes most people is that ENIAC was originally dedicated at the University of Pennsylvania because John Eckert, a foremost computer pioneer and one of the creators of ENIAC, was at the University of Pennsylvania's Moore School of Electrical Engineering.

The mainframe general design as we know it today took shape in the decades following WWII. IBM made the fundamental jump to semiconductors commonly known as the transistor which enabled a significant reduction of size and energy demands while laying the groundwork for achieving higher performance. All this in turn provided more opportunities for the computer scientists to explore new ways that the fledgling mainframe hardware should be improved to enable innovative concepts of computing.

The Twist

IBM would have been satisfied just chugging along building faster and better mainframes - their engineers and scientists innovating electronics to make a better mousetrap for the same functionality. Their commercial customers were just looking to manage bookings in quantity and speed, to run their payroll in the least amount of time, and to do their accounting with the least effort. Interestingly though, amongst the commercial customers were the same universities where the computer scientists were exploring the very definition of computing. The universities had a "business" to run, the administration of the university itself from the registrar's office to finances, essentially the same as other commercial customers. But there was a difference. A mainframe was not a trivial investment and so from a university perspective it was unlikely that they would have invested in two separate mainframes, one for the administration, and the other for student and academic studies. So these leading universities had a department dedicated to providing computing services for the entire campus, and they were the ones responsible for making the best use of an expensive and exclusive resource. For the era, such groups had little need to hire outside of the campus and instead relied on competent people who came through the computer science program at the university itself. This meant the folks leading these groups, albeit just a service, really had an interest in being in lockstep with the academic side. They posed interesting questions and needs - how best to serve the university's academics in the computing area? IBM as well would benefit from providing mainframe capabilities to universities, as their research would support IBM's own internal research and development. One must also remember that at the time a mainframe wasn't a purchase, it was more of a long term lease with maintenance and support included. It made sense, as a mainframe wasn't a plug and play appliance; it required constant attention and specialized engineering skills.

The obstacle in the early years was quite simple. An early mainframe, powerful as it might be, performed by accepting what is known as a batch job, think of it as the stack of punch cards which provided instructions. The result would be reams of printout. But it was one job at a time, one by one as they queued up. From a mainframe perspective there were plenty of dead moments when nothing was happening, particularly in a 24 hour time frame. Or the opposite happened - one job simply took forever, leaving many to wait for an extended time.

The first important architectural shift occurred. The concept of multiprogramming, often loosely called multiprocessing, took hold. This would allow multiple jobs to be handled at the same time from a user's perspective. The architectural shift happened early on and there was no question about its usefulness. It was a way of maximizing the power of a mainframe.

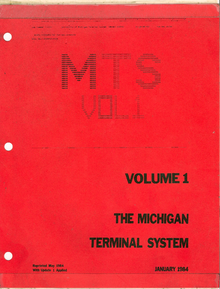

The 1960-70s and the University of Michigan

In this panorama of what to do, the University of Michigan (UM) academic Computing Center was at the vanguard. They worked closely with IBM engineers as they designed new features and functions that would make their services better and IBM went so far as to modify their mainframe hardware to optimize these features. Indeed some would not have been possible solely in the software of the operating system, some hardware change was required.

UM's academic Computing Center went further. Multiprocessing was fine for batch jobs, but they wanted a better interaction for the students and academics so that it was more immediate and in real time with the mainframe. They opted to pursue the idea of implementing time-sharing as a way to provide simultaneous access to the mainframe by all.

The Michigan Terminal System (MTS) was born.

Cloning, duplication, replication, and virtualization are all terms that are at the heart of a mainframe, and a PC is no different in concept. In simple words a PC is architecturally everything a mainframe is, just a fraction in size and power as well. So, conversely, everything a PC can or could do is something that a mainframe already discovered and tried. Think of a PC as a miniaturized mainframe whose cost is so low that it can spend most of the day being idle and not doing anything.

In principle, a computer or more precisely the brain known as the central processing unit (CPU), is nothing more than a glorified adding machine. Multiplication is nothing more than a lot of adding, and division a lot of subtraction. The magic happens because a CPU is capable of performing an incredible number of these operations per second. So while it takes an incredible number of instructions to create what we see on a computer screen and for it to be dynamic and alive rather than static, the magic of the CPU makes it look like it takes nothing to achieve it. And just to complete the picture, what about supercomputers? If a mainframe is, as an example, 100,000 times more powerful than a personal computer, then scaling further, in principle, a supercomputer is 100,000 times more powerful than a mainframe.
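
To make the adding machine idea concrete, here is a minimal Python sketch. It is only an illustration, not how a real CPU is wired: the function names `multiply` and `divide` are made up for this example, and it assumes non-negative whole numbers.

```python
def multiply(a: int, b: int) -> int:
    """Multiply two non-negative integers by repeated addition."""
    total = 0
    for _ in range(b):
        total += a  # add a to the running total, b times over
    return total


def divide(a: int, b: int) -> tuple[int, int]:
    """Divide a by b by repeated subtraction.

    Assumes a >= 0 and b > 0. Returns (quotient, remainder).
    """
    if b <= 0:
        raise ValueError("b must be positive")
    quotient = 0
    while a >= b:
        a -= b         # take b away once more
        quotient += 1  # and count how many times we managed it
    return quotient, a


if __name__ == "__main__":
    print(multiply(6, 7))
    print(divide(17, 5))
```

Slow and plodding for a person, but for a machine that performs millions of such steps every second the plodding is invisible.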

But it is this speed that enables any computer to perform the magic. In essence, in the blink of an eye it can look like it is doing many different things at the same time, but it is just an illusion. A glorified adding machine, albeit very fast, is still only capable of one step at a time and is therefore sequential at its core. The magic is created by dedicating a number of "cycles" to different tasks in some order. It's like a chess grandmaster who plays 20 other players in the same tournament and we say it is simultaneous but it really isn't. The grandmaster is merely dedicating a specific time to one board before going to the next, eventually working through them all in a round-robin fashion. If the opponents didn't look they probably wouldn't have noticed that the grandmaster was sitting at someone else's board.
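
The grandmaster's walk from board to board can be sketched in a few lines of Python. This is a toy under stated assumptions, not how MTS or any real operating system schedules work: the function `round_robin`, the job names, and the idea of a fixed `time_slice` of cycles are all invented here for illustration.

```python
from collections import deque


def round_robin(jobs: dict[str, int], time_slice: int) -> list[tuple[str, int]]:
    """Simulate one CPU sharing its cycles among several jobs.

    jobs maps a (hypothetical) job name to the cycles it still needs.
    Returns the order of service as (job name, cycles used) pairs.
    """
    if time_slice <= 0:
        raise ValueError("time_slice must be positive")
    queue = deque(jobs.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        used = min(time_slice, remaining)  # never use more than the job needs
        timeline.append((name, used))
        if remaining > used:
            # Not finished: back to the end of the line, like the next board.
            queue.append((name, remaining - used))
    return timeline


if __name__ == "__main__":
    for name, used in round_robin({"A": 3, "B": 5, "C": 2}, time_slice=2):
        print(f"job {name} runs for {used} cycle(s)")
```

Only one job ever runs at any instant, yet each of them keeps making progress, which is all the illusion needs.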

All this power, if it is only accessible by providing a packaged set of instructions (a program) and awaiting the results, is still a far cry from what we consider to be computing today - a real time and interactive relationship. The concept of time-sharing in a so called conversational mode was already known, but to make it a reality wasn't exactly child's play. IBM labored at it for a while but in the end gave up. Well, not quite. IBM's research center in Cambridge, Massachusetts, unencumbered by commercial methods, more than made headway. In time they developed the capability that IBM would end up commercializing and that to this day remains a cornerstone of their mainframe offering. In the late 60s and early 70s nothing had really stepped out of the research facility, but the hardware capability was there and there were others seeking to exploit it, primarily the scientific and research community.

A consortium of US and Canadian universities collaborated to design and develop a fully operational time-sharing system using the mainframe hardware that IBM made available, the Michigan Terminal System. It was an incredible form of innovation but really exclusive to a rather small community. The consortium was comprised of eight universities, and RPI, my alma mater, was one of them. So when I was learning computing at RPI, MTS was already in use and I didn't think any more of it than just that it was the way computing was to be used. Little did I know or really appreciate at the time how exclusive and unique it was, and how it was literally light years ahead of everyone else. Aside from the consortium, MTS was also used by Hewlett-Packard and NASA's Goddard Space Flight Center. For MTS you simply had an account with which you could log on to the system, where you had storage allocated and a number of commands to use, much like today's popular term "app". MTS at RPI remained in use for over thirty years.

By the time I joined IBM in 1982, IBM had just managed to commercialize the early version of their time-sharing system, known as Conversational Monitor System (CMS). It wasn't quite the same as MTS but easy enough to transition to, much like driving a different car with some different features. It wasn't alien to me at all. But again, considering the backdrop, there were literally only thousands of people using mainframes with IBM's CMS as a commercial installation, and in Bangkok only maybe two major banks had any installation with the capability. But at the IBM Bangkok branch office there was a team of a dozen folks using it as part of their daily job as systems analysts, yours truly among them.

Most would not have even an inkling of what a courtship in the era of mainframes means, it just doesn't compute. Say courtship in the era of digital communications and possibly what comes to mind is email and the internet. Then with the arrival of mobile phones and with the capabilities of current day devices that are graphic and seamless, the notion of a chat as a form of anytime and anywhere non-verbal communication is taken for granted.

TELL the feature and function

A courtship in the early days of digital communications, harking back 40 years to when the notion of the internet was still a lab experiment, would be an unlikely beneficiary. Today a digital chat can mean many things, and for those of the younger generation the idea of a phone call is alien since we have the notion of constant connectivity. Even an app like WhatsApp renders the experience seamless, moving between voice and text (and emojis). Those older remember when access to the internet via a low speed modem still provided exciting ways of finding people online through numerous "chat" like applications.

The 1998 film adaptation, You've Got Mail, was a reflection of this change in relationship communications. But we can go back in time even further. Say mainframe and most would think something prehistoric, a dinosaur in analogy perhaps, large, cumbersome, not useful for personal use, or in simple words, beyond reach. The mainframe is not gone. It has evolved, perhaps ever so slowly, but not because of being "old" or incapable of easy development. The truth is that its basic structure and early development were so advanced that they remain perfect today. The software part has merely evolved to take advantage of the underlying hardware and its revolution.

An IBM mainframe runs an operating system capable of creating many virtual machines, the part that multi-processing innovation permits. An IBM Conversational Monitor System (CMS) is the part of a virtual machine that provides an individual user the semblance of having their own computer, the part that time-sharing introduced.

On a virtual machine that is defined as a node, say we call it DALVM1, many user accounts may be defined on the system, say one such account is called JOHNDOE while another is KATEDUN. Between the two, text messages can be exchanged by simply using the command "CP MESSAGE" followed by the user account name and the text of the message. So "CP MESSAGE KATEDUN" followed by the text works when the command is sent from JOHNDOE's active session.

Within IBM's S/370 networking at the time, nodes were connected to other nodes in a way that would look familiar to today's users of the internet. Say there was another node known as TOKVM2 and a user account on that system called KEISAMA. JOHNDOE on node DALVM1 can communicate with KEISAMA by simply using the TELL command variant, similar to CP MESSAGE, but it would be "TELL KEISAMA at TOKVM2" and the magic happens. It's transparent and seamless even though DALVM1 could be running in Dallas while TOKVM2 could be a system in Tokyo.
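
For the curious, the difference between the two commands can be imitated with a toy model in Python. Everything in it is an assumption for illustration only: the `Node` class, its `message` and `tell` methods, and the format of the delivered text are invented here and say nothing about how IBM's software actually worked internally. It only shows the idea that a local message stays on one node while TELL hands it over to a linked node.

```python
from __future__ import annotations


class Node:
    """A hypothetical stand-in for one system on the network, e.g. DALVM1."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.inboxes: dict[str, list[str]] = {}  # user id -> received messages
        self.links: dict[str, Node] = {}         # node name -> linked node

    def add_user(self, user: str) -> None:
        self.inboxes[user] = []

    def connect(self, other: Node) -> None:
        """Link two nodes in both directions."""
        self.links[other.name] = other
        other.links[self.name] = self

    def message(self, sender: str, recipient: str, text: str) -> None:
        """Local delivery only, in the spirit of CP MESSAGE."""
        if recipient not in self.inboxes:
            raise LookupError(f"{recipient} is not a user on {self.name}")
        self.inboxes[recipient].append(f"FROM {sender}: {text}")

    def tell(self, sender: str, recipient: str, text: str, at: str | None = None) -> None:
        """Deliver locally, or pass the message to a linked node, like TELL."""
        if at is None or at == self.name:
            self.message(sender, recipient, text)
            return
        if at not in self.links:
            raise LookupError(f"{self.name} has no link to {at}")
        # Label the sender with the originating node so a reply can find its way.
        self.links[at].message(f"{self.name}({sender})", recipient, text)


if __name__ == "__main__":
    dallas, tokyo = Node("DALVM1"), Node("TOKVM2")
    dallas.add_user("JOHNDOE")
    dallas.add_user("KATEDUN")
    tokyo.add_user("KEISAMA")
    dallas.connect(tokyo)

    dallas.message("JOHNDOE", "KATEDUN", "LUNCH?")
    dallas.tell("JOHNDOE", "KEISAMA", "HELLO FROM DALLAS", at="TOKVM2")
    print(dallas.inboxes["KATEDUN"])
    print(tokyo.inboxes["KEISAMA"])
```

From JOHNDOE's side the two calls look almost identical, which is the whole point: where the other person happens to sit is the network's problem, not the user's.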

That was and remains today the beauty of TELL: it works with any user active on any system that the IBM network can reach, anywhere in the world.

A Courtship

At work I would use TELL to reach colleagues for information and found it convenient as it was real time but also asynchronous as the person didn't necessarily have to respond immediately.

Then, without even thinking about it, it became a means to exchange messages in a courtship, somewhat like kids passing messages surreptitiously in a school class. This form of communication in the courtship felt special and exciting. We could have called each other on the phone, the kind with a rotary dial, but others would have noticed and listened in. Nobody was yet curious enough to try to look at another person's screen, and reading small text from a distance would have been quite impossible anyway. When traveling for work to other IBM locations in different countries, TELL gave us the means to avoid costly international calls and remain connected throughout the work day. We even developed our own coded text to simplify certain phrases and be more efficient, a precursor to emojis. We had our own little private chat going on, oblivious to all around us. A dimension of romance that today would be mundane or simply assumed to be typical.

We probably weren't the only ones doing this, but I suppose it was a rarity at the time. Who knows if the relationship would have gone differently without TELL? Most likely we would have resorted to homing pigeons.

What's in store for the future? Who's to say. We've been early adopters of different communication technologies ever since. We got a 1200 bps modem as soon as they became affordable and signed up with internet email providers as they became available. We started using hardware adapters to make rudimentary phone calls over the internet. We embraced Skype as a usable video call system. While we did get iPhones early on, we never used FaceTime, as friends and family were on Android phones and using other apps. One thing though is already lost. In chats and messages, most of the exchanges are lost forever, the only exception maybe being for forensic purposes in criminal cases. In the days of pen and paper, many letters of courtship were treasured and conserved, allowing future generations a glimpse of how the past felt.

Perhaps at a future point of singularity, with the availability of "infinite" memory, every single moment of a person's life experience will be forever "archived", and all of that may initially take up the space of a dot and then be scaled down further to just a single cell. At that point who knows how AI will change how we communicate? Will it be a filter or will it interpret? And of course, might AI have helped mankind make telepathy a reality? That's a bit of Asimov stretching it.

But for now just a simple closing thought. Relationships, the important ones, aren't just made of physical interactions; two-way communication is the essence. We all talk and listen differently. How our trains of thought work and transform into words happens in many ways, and sometimes we are even tongue-tied. A letter or a chat at times gives us the opportunity to communicate better and more fully. A mode and approach that should never be underestimated.

IBM and RPI, a long and outstanding relationship

I am honored that one is my alma mater and the other the only enterprise I worked for.

Over the years many distinguished RPI graduates joined the ranks of IBM in important roles, both technical and managerial. In this special relationship IBM has made numerous significant technologies available to the institute, the most recent being the quantum computer dedicated in 2024. For years, Dr. Shirley Ann Jackson, 18th RPI President and US National Medal of Science recipient, was on IBM's Board of Directors. Likewise, the ranks of RPI's Board of Trustees have seen IBMers over the years. They are currently represented by John E. Kelly III, Class of '78, Chair of the Board of Trustees and retired IBM Senior Vice President for Research and Development, and Dr. Dario Gil, IBM Senior Vice President and Director of Research.

In March of 2024 IBM and RPI unveiled the latest computing advancement of this important relationship - deployment of IBM's Quantum System One. Curtis R. Priem, Class of '82, Vice Chair of the RPI Board of Trustees, designer of the IBM Personal Computer's first graphics processor and co-founder of NVIDIA, was a major contributor to the project.

This might bring out a "wow!" from most people, and yes, making a computer based on quantum mechanics commercially is no simple feat. But in keeping with the context of "To TELL", a quantum computer is, in terms of what it can compute, still a classical computer. What? It sounds outrageous, but a quantum computer is not simply a better supercomputer, nor does it step outside the Turing model. It's just that the circuitry is implemented differently. It can work through certain types of algorithms far more effectively, where a classical computer would take forever. Still, in principle, what a classical computer can do, so can a quantum computer, and vice versa.
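To make the "vice versa" a little more concrete, here is a minimal sketch in plain Python of an ordinary computer simulating a single qubit. It applies a Hadamard gate, a standard one-qubit operation, to a qubit that starts in the zero state. The helper names apply_gate and probabilities are my own, and this is only a textbook-style illustration, not how IBM's Quantum System One is programmed.

```python
import math


def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a 2-element state vector."""
    return [
        gate[0][0] * state[0] + gate[0][1] * state[1],
        gate[1][0] * state[0] + gate[1][1] * state[1],
    ]


def probabilities(state):
    """Chance of measuring 0 or 1: the squared magnitude of each amplitude."""
    return [abs(amplitude) ** 2 for amplitude in state]


S = 1 / math.sqrt(2)
HADAMARD = [[S, S], [S, -S]]

zero = [1.0, 0.0]                           # qubit starts as a definite 0
superposed = apply_gate(HADAMARD, zero)     # equal mix of 0 and 1
restored = apply_gate(HADAMARD, superposed) # a second Hadamard undoes the first

print(probabilities(superposed))
print(probabilities(restored))
```

One qubit is easy. The catch is that simulating n qubits this way needs a list of 2 to the power n amplitudes, so the classical machine can follow along in principle but runs out of time and memory very quickly.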

A quantum computer brings value to RPI because it will advance research in many areas where a supercomputer would be slow to make progress, and RPI already has an IBM supercomputer.
